Counterfactual medical image generation enables clinicians to explore clinical hypotheses, such as predicting disease progression, and thereby supports their decision-making. While existing methods can generate visually plausible images from disease progression prompts, they produce silent predictions with no accompanying interpretation for verifying how the generated image reflects the hypothesized progression. This is a critical gap for medical applications that require traceable reasoning.

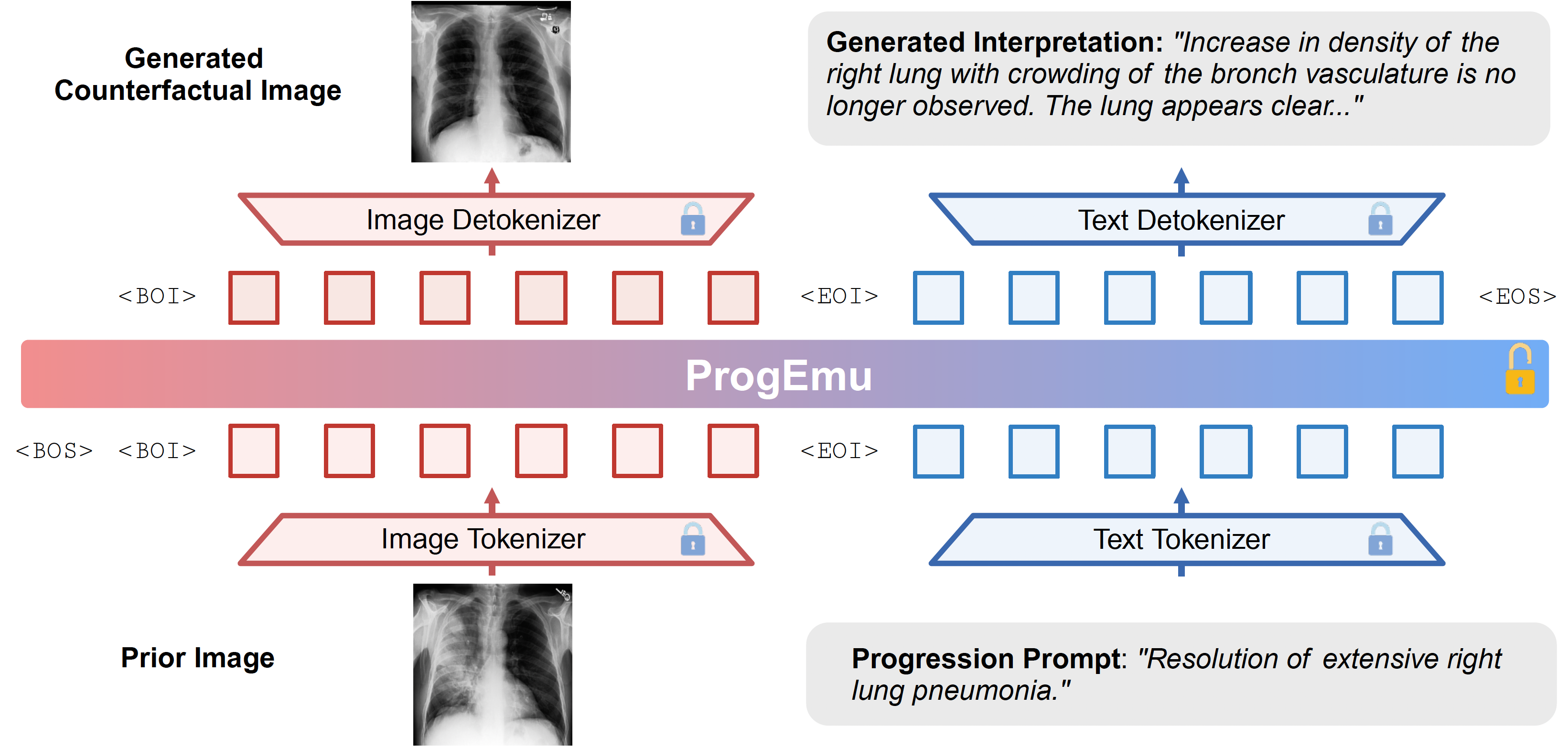

In this paper, we propose Interpretable Counterfactual Generation (ICG), a novel task requiring the joint generation of counterfactual images that reflect the clinical hypothesis and interpretation texts that outline the visual changes induced by the hypothesis. To enable ICG, we present ICG-CXR, the first dataset pairing longitudinal medical images with hypothetical progression prompts and textual interpretations. We further introduce ProgEmu, an autoregressive model that unifies the generation of counterfactual images and textual interpretations.

We demonstrate the superiority of ProgEmu in generating progression-aligned counterfactuals and interpretations, showing significant potential in enhancing clinical decision support and medical education.

Several prior works inspire and relate to our research.

In medical counterfactual generation, early approaches such as Latent Shift generate chest X-ray (CXR) counterfactuals by perturbing latent representations to exaggerate or curtail the features that drive a prediction. More recently, RoentGen introduces a domain-adapted latent diffusion model that generates high-quality CXRs from text descriptions, a paradigm shift that brings greater flexibility. BiomedJourney and PIE reframe counterfactual generation as an image editing problem and adapt diffusion models to precisely modify specific pathological attributes. EHRXDiff predicts future CXRs by combining previous CXRs with subsequent medical events. Further, CXR-IRGen integrates a diffusion model with a language model, moving beyond image editing to jointly generate CXRs and radiology reports.

From a broader perspective, there is a growing trend toward unifying multimodal generation and understanding within a single AI system, as in Chameleon and Emu3, paving the way for more generalizable and scalable AI models. These advances hold immense potential not only for counterfactual medical generation but also for the development of more robust, generalizable, and clinically valuable AI-driven imaging solutions.

@article{ma2025towards,
  author = {Ma, Chenglong and Ji, Yuanfeng and Ye, Jin and Zhang, Lu and Chen, Ying and Li, Tianbin and Li, Mingjie and He, Junjun and Shan, Hongming},
  title = {Towards Interpretable Counterfactual Generation via Multimodal Autoregression},
  journal = {arXiv preprint arXiv:2503.23149},
  year = {2025},
}